Analysis

Low-Frequency Motion Control (LFMC) for robotic locomotion is based on the motivation that animals are able to exhibit agile and dynamic locomotion skills despite significant sensorimotor delays. These delays further limit their motion control frequency. In this study, we explore the possibility of robust and dynamic locomotion for robots with low-motion control frequency. We also investigate potential benefits of such an approach. For this, we compare motion control behavior at frequencies ranging from 5 Hz to 200 Hz.

Sensorimotor Delays in Animals

Biological systems are susceptible to neural sensory noise1 in addition to sensorimotor latencies2. These latencies correspond to Nerve Conduction, Electro-Mechanical and Force Generation delays. More et al. study the various factors of delays and present how these scale with respect to the mass of an animal3. For a 40 Kg animal, for example, the total sensing and actuation delays are estimated to be 67ms of which 30.4ms are required to process sensory feedback, generate an actuation signal, and deliver eletro-mechanical commands. The remaining delay corresponds to the ramp-up time for achieving peak muscle force.

Ashtiani et al.4 explore these latencies in biological systems and present an example in which a house cat, exhibiting a locomotion frequency of 5 Hz5, is sensor-blind for half its stance-phase suggesting that high-frequency feedback-based decision-making may not be critical for locomotion over challenging terrain. Ashtiani et al. further investigate this through a focus on high compliance of biological actuators and propose a mechanical design to realize actuation response similar to animal muscle-tendon units. A rich body of work has built on this motivation and have proposed bio-inspired system designs6 for smooth and compliant motion control response.

Low-Frequency Motion Control

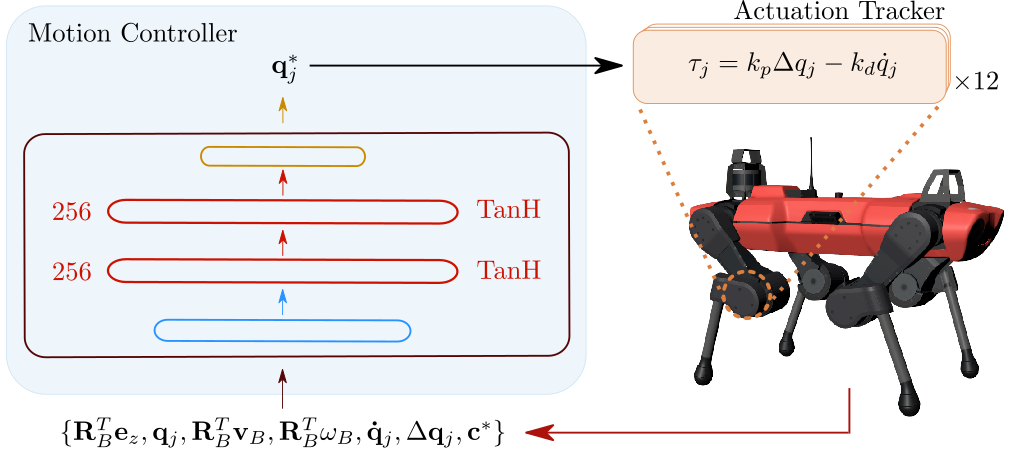

In contrast to existing works that investigate mechanical designs that allow animals to perform robust and agile locomotion, we consider the possibility of achieiving low-frequency motion control through a controller that is able to thoroughly utilize system capabilities. We therefore use deep reinforcement learning (RL) to train motion control policies that generate low-level desired joint position commands. These commands are then tracked by the actuators using an impedance controller. The figure below illustrates the control architecture utilized in this work.

The input to the motion controller comprises robot states and desired base velocity commands. We defer the reader to the manuscript for additional information. The motion controller maps the input to desired joint positions \(\mathbf{q}^{\ast}_j\) that are tracked by the on-board actuators. The ANYmal C robot, utilized in this work, contains 12 rotary joints.

Training Motion Control Policies

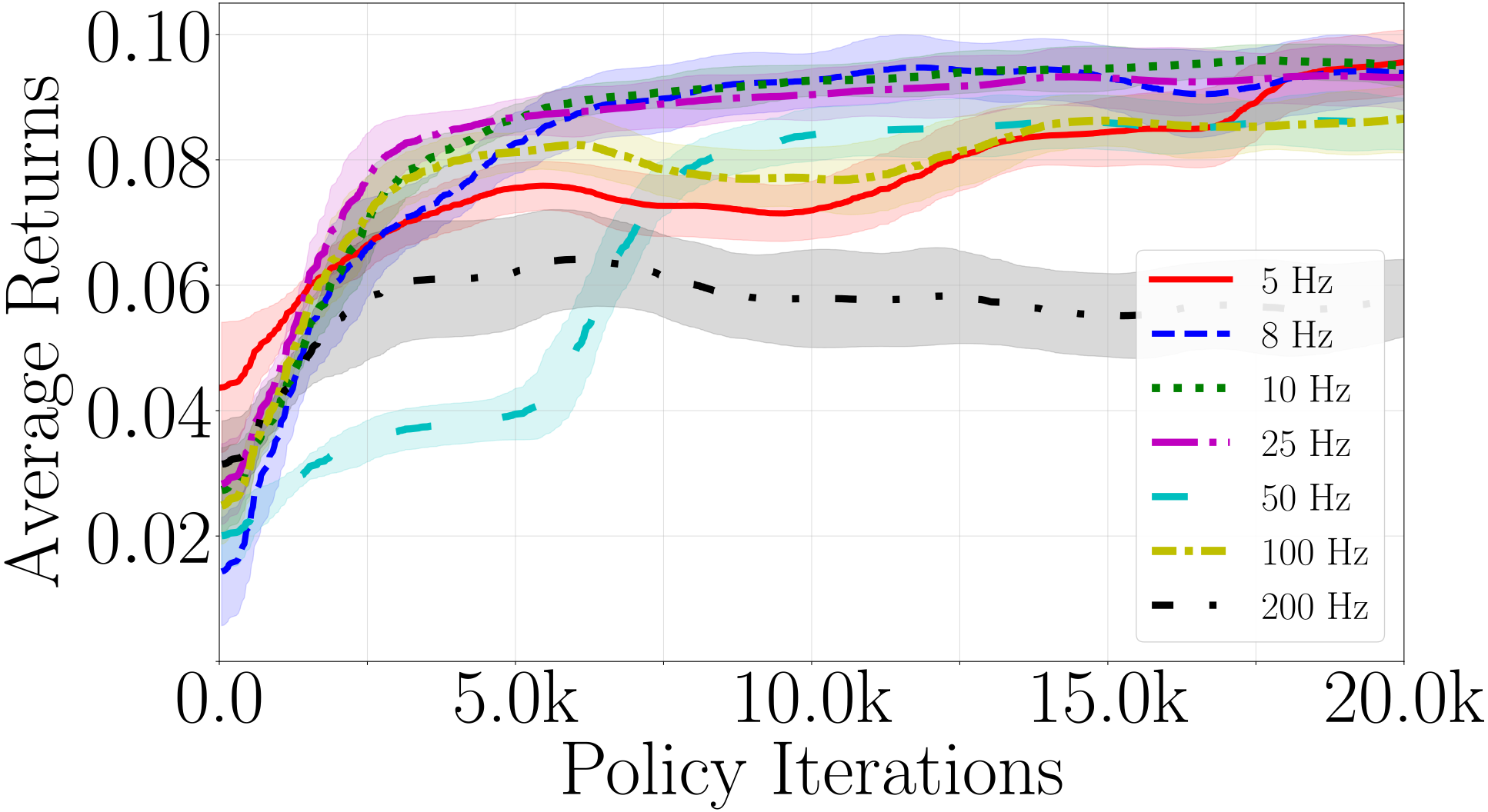

We use RL, specifically proximal policy optimization (PPO)7, to obtain motion control policies that enable a quadrupedal robot to track user-defined base velocity commands. Here, we investigate how different frequencies influence training.

The above figure shows the average reward that policies trained at different frequencies recieve for the duration of 20k steps. We observe that for LFMC policies, the convergence occurs faster than for motion control policies trained at 50 Hz or more. We additionally observed that LFMC policies were less sensitive to training hyperparameters. For a consistent analysis, however, we had to choose training hyperparameters that worked for each of the training frequencies. Nonetheless, it is important to highlight that, in our experience, training at low-frequencies is easier than training at high-frequencies. We discuss this in the following section.

We trained each of the motion control policies to account for scaling of rewards and hyper-parameters for changes in control time steps. This is described in the manuscript. The training configurations utilized for the comparaitive analysis described in the manuscript can be downloaded below.

5 Hz 8 Hz 10 Hz 25 Hz 50 Hz 100 Hz 200 Hz All

Note that, based on our setup, we observe poor locomotion behavior for number of environments less than 240. We therefore utilize a minimum of 240 environments during training. Additionally, we utilize a minimum of 8 steps in an episode per environment.

Motion Planning Hypothesis

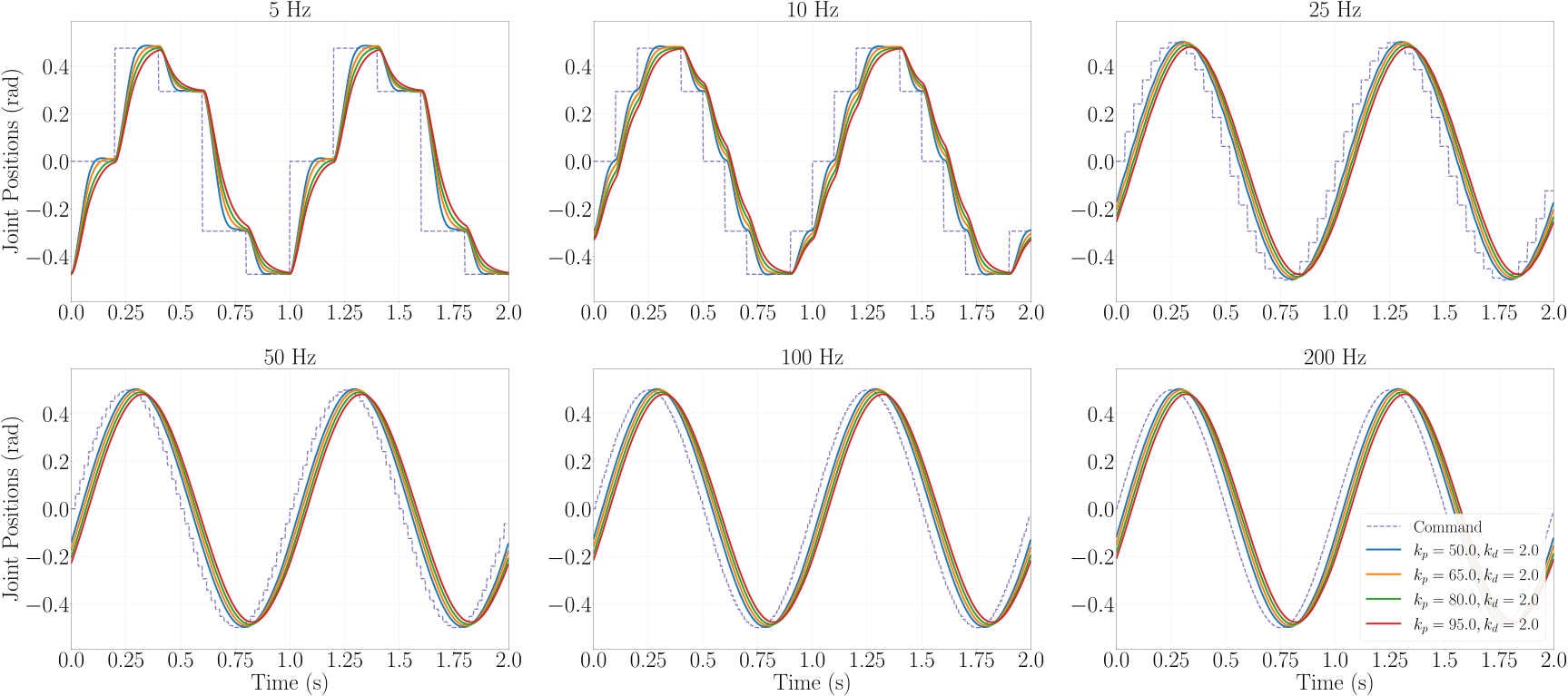

To develop an intuitive understanding of why low-frequency motion control is easier to train, we consider a toy example of a PD controller tracking sinusoidal set points updated at frequencies ranging from 5 Hz to 200 Hz. In this work, the impedance (or PD) controller is written as

where \(\boldsymbol{\tau}_j\) is the joint acutation torque, \(\Delta\mathbf{q}_j\) represents the joint position tracking error and \(\mathbf{\dot{q}}_j\) is the joint velocity. \(k_p\) and \(k_d\) represent the actuation tracking gains.

As shown in the figure above, for the 5 Hz update frequency, the joint trajectories converge to very similar states before a new set point is generated. For a 5 Hz controller, this implies the sensory readings at each update step are less effected by actuation tracking dynamics in comparison for higher update frequencies such as 200 Hz. For an effective control behavior, the 200 Hz motion controller is required to adapt to any possible variations in actuation dynamics implying that high-frequency motion control (HFMC) policies require observability of the actuation dynamics to be able to exhibit an adaptive behavior. In contrast, LFMC policies are robust to small variations in actuation dynamics.

This leads us to the hypothesis that LFMC policies can operate as planners while HFMC policies are required to operate as controllers. We refer to this as the Motion Planning Hypothesis.

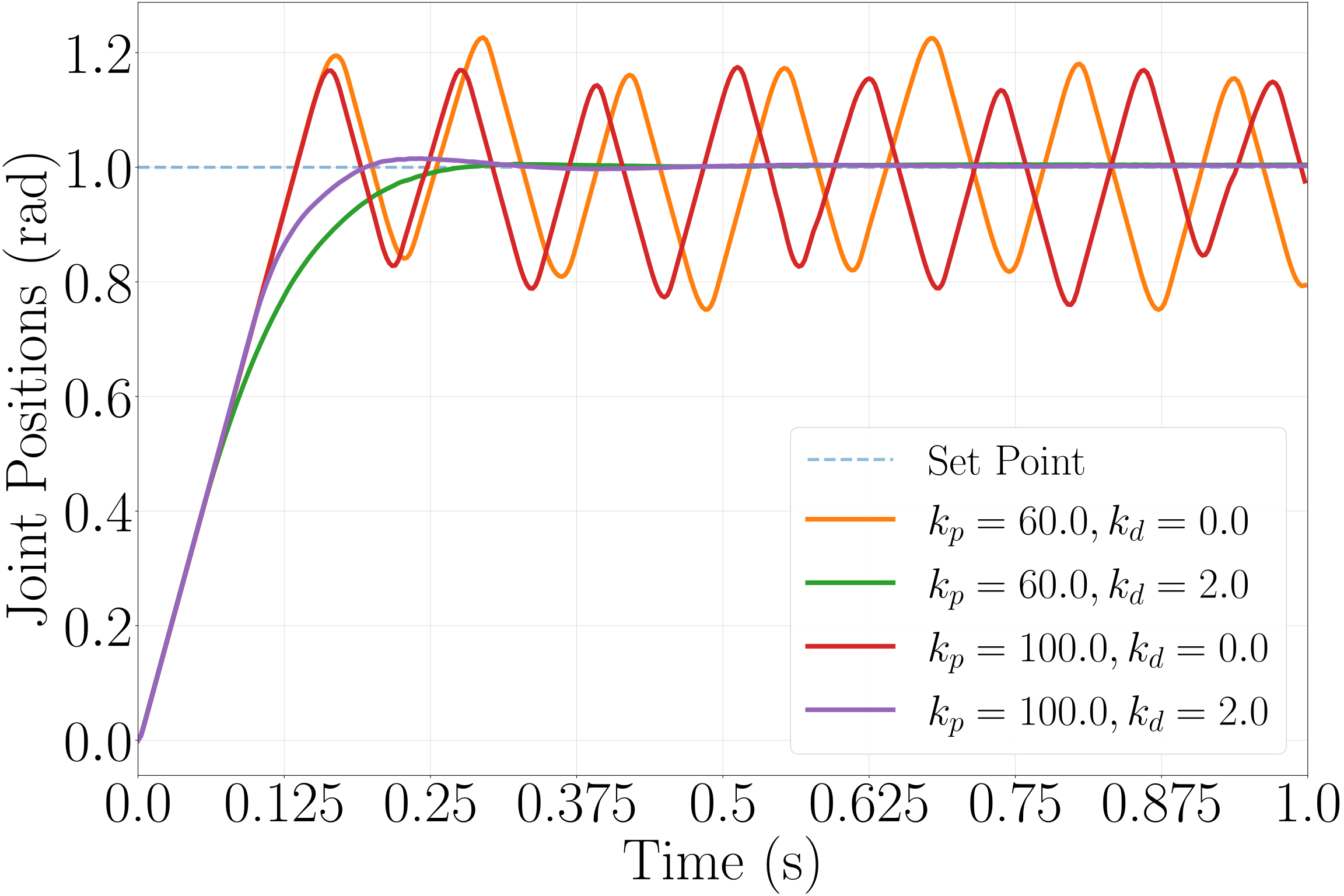

This low-frequency motion planning, however, requires that the underlying tracking controller is stable. Consider an example of step response observed for different actuation tracking gains.

As shown in the figure above, for extremely low damping (\(k_d=0\)), it is possible that the impedance controller is unable to converge to the desired set point resulting in an oscillatory behavior which introduces instabilities while tracking motion plans. In contrast, for low tracking gains, it is possible that the convergence to the desired set point may either be slow or may not even occur. For our experiments with the ANYmal C robot, we used \(k_p=80\) and \(k_d=2\).

In our experiments, we observed that training low-frequency motion policies did not require a well-designed training environment or state-space. Unlike in the case of Hwangbo et al.8 who trained a locomotion policy at 200 Hz and noted that a history of joint states in the state-space was essential to learn a stable locomotion policy, we were able to perform successful sim-to-real transfers for LFMCs without any dynamics randomization or introduction of history of joint states. For HFMCs, however, we observed an aggressive actuation tracking behavior resulting in significant vibrations around the joints of the quadruped. We additionally observed this behavior in some of the manipulation tasks, however, this is beyond the scope of the current work.

Locomotion Behavior

Here, we investigate the differences in locomotion behvaior of different policies. The clips below demonstrate the behavior a 10 Hz LFMC policy and a 100 HFMC policy following the same base velocity command.

Command Tracking: 10 Hz

Command Tracking: 100 Hz

For a basic environment setup, with no joint-state history in the state-space, we observe preference for larger foot-swing duration for LFMC policies compared to HFMC policies. This is further shown in the ground contact illustration below.

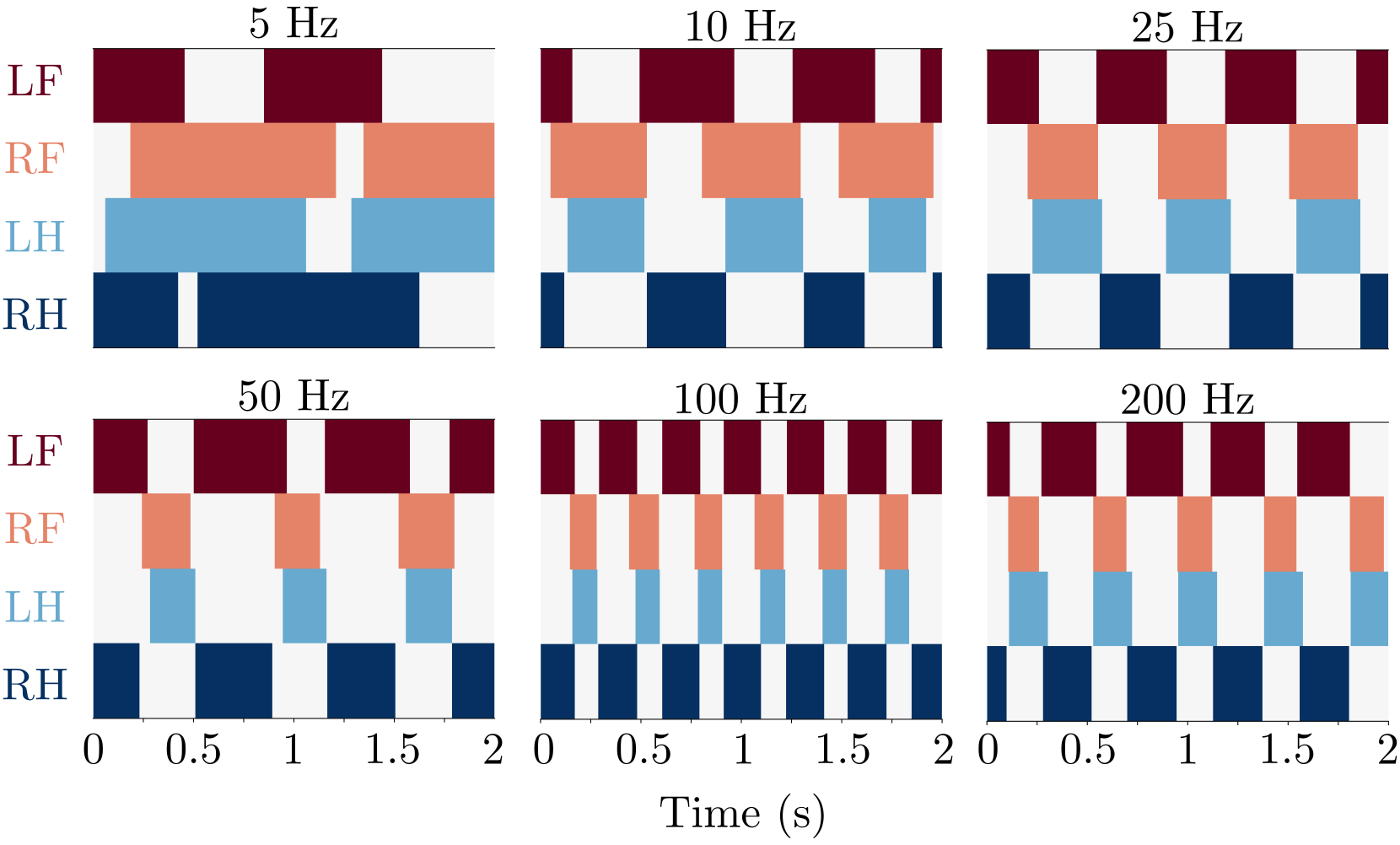

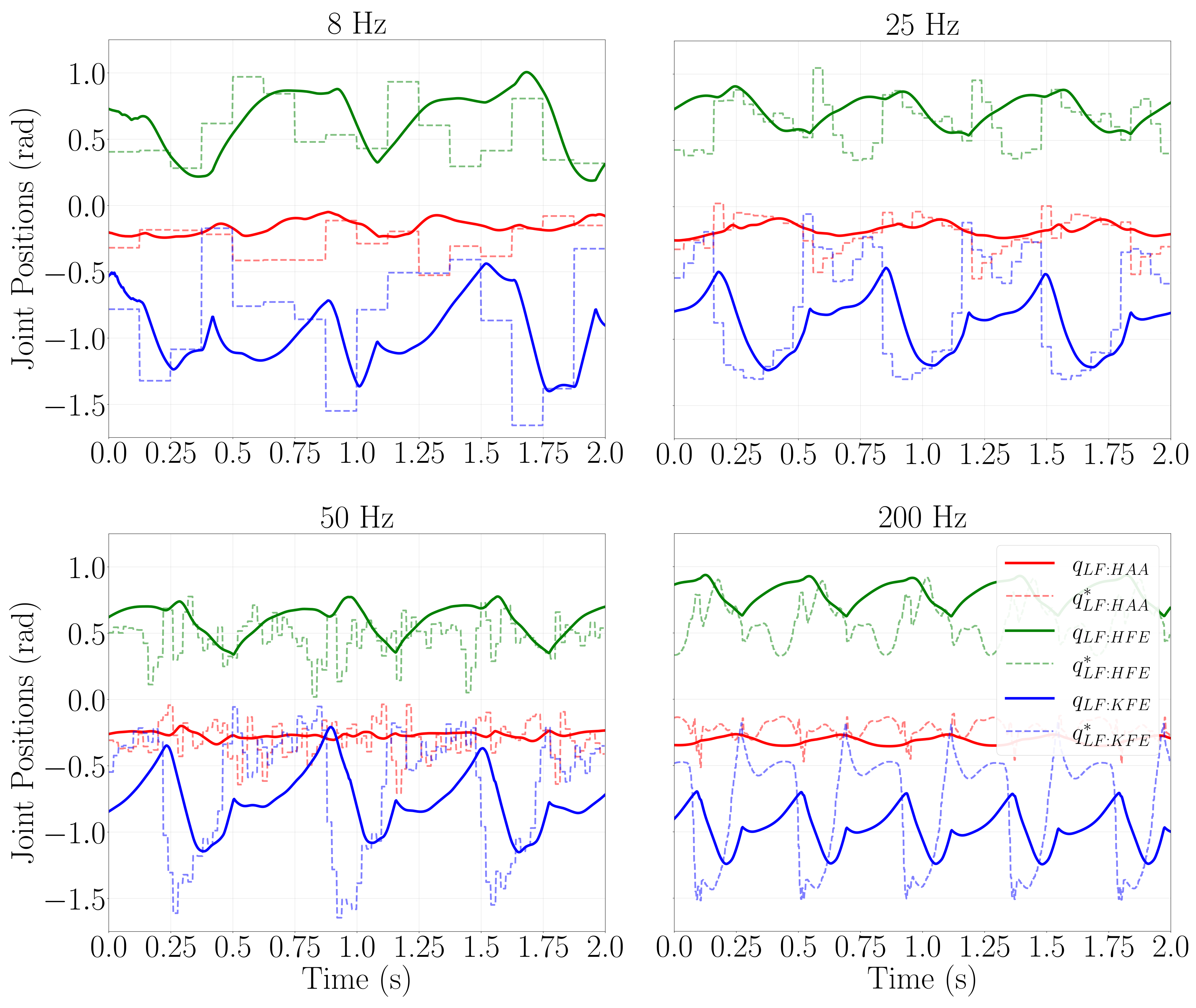

In the above figure, LF represents left-front foot and RH represents right-hind foot. The shaded regions correspond to the duration the robot feet are in contact with the ground. We suspect this behavior originates from the delays in updating desired joint positions for LFMC in comparison to HFMC. Since the state-space does not include a joint-state history and the policies are trained without dynamics randomization, we limit the ability of each of the policies to implicitly learn a system dynamics model. The policies therefore simply learn an oscillatory behavior. With delayed joint-state commands, the LFMC policies exhibit larger feet swing and stance durations. When joint-state history is introduced in the state-space, however, we observe a lot similar locomotion behavior for various frequencies. The figure below further demonstrates the tracking of desired joint position commands generated by locomotion policies at different frequencies.

Here, HAA represents the hip-adduction-abduction joint, HFE is the hip-flexion-extension joint, and KFE is the knee-flexion-extion joint. Also, \(\mathbf{{q}}_j\) is the measured joint position and \(\mathbf{{q}}^{\ast}_j\) is the desired joint position.

Robustness Analysis

According to the proposed motion planning hypothesis, the LFMC policies are less effected by the actuation dynamics compared to HFMC policies. We observe the ability of the policy to be robust to maximum synthetic joint command latencies before failure. In this case, failure is considered to be the state where the robot base drops down and collides either with the ground or robot feet. The latency is measured at a resolution of 200 Hz (5ms).

| Training Frequency (Hz) | Latency (ms) |

|---|---|

| 5 | 90 |

| 8 | 90 |

| 10 | 90 |

| 25 | 65 |

| 50 | 50 |

| 100 | 30 |

| 200 | 20 |

We also test the actuation tracking frequency at which the motion controller fails to locomote. We observe that the 10 Hz motion control policy is able to walk without falling over even at an actuation tracking frequency of 13 Hz. However, the locomotion behavior with a motion control frequency of 10 Hz and actuation tracking frequency of less than 25 Hz is not suited for transfer to real robot since we observe signficant oscillations during position tracking.

Actuation Tracking Frequency of (first) 10 Hz, (second) 13 Hz, and (third) 16 Hz. [Order: Left to Right and Top to Bottom]

Actuation Tracking Frequency of (first) 20 Hz, (second) 25 Hz, and (third) 30 Hz. [Order: Left to Right and Top to Bottom]

Actuation Tracking Frequency of (first) 40 Hz, (second) 80 Hz, and (third) 200 Hz. [Order: Left to Right and Top to Bottom]

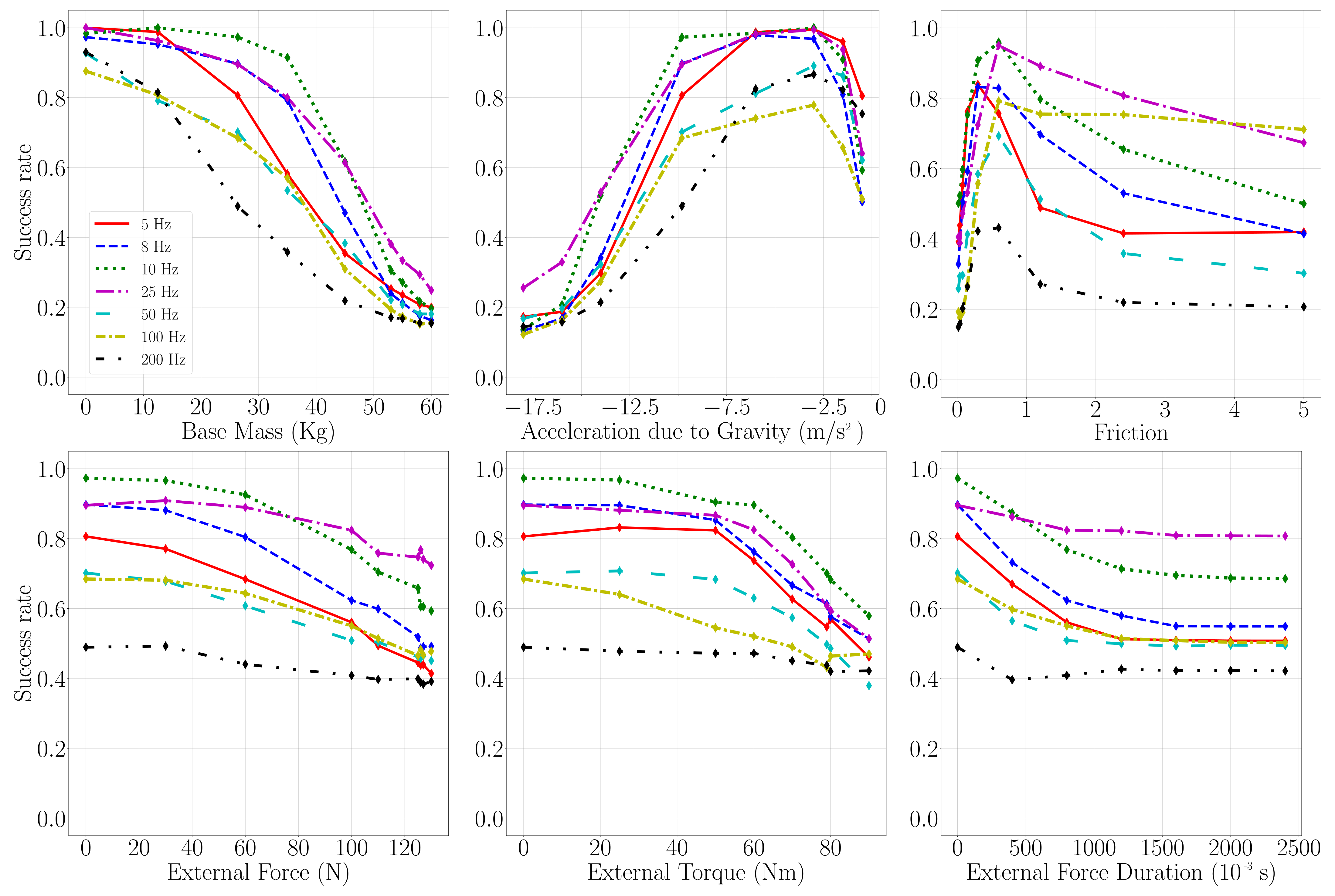

In addition to robustness to actuation dynamics, we test the ability of LFMC policies to be robust to changes in system and environment dynamics. For this, we vary robot mass, gravity and terrain friction and measure the robot's success rate for traversal over rough terrain as shown below.

We also test the reactivity of the policies by perturbing the robot base. We define the performance metric (success rate) as

where \(N_e\) refers to the number of episodic rollouts that were terminated early due to an invalid robot state and \(N_T\) represents the total number of rollouts. Here, we use \(N_T=100\). An invalid robot state is defined by the criteria:

- \(\arccos{(\mathbf{R}_{B_{3,3}})} > 0.4\pi\) which relates to base roll and pitch magnitude exceeding a threshold,

- self-collisions, or

- collision of the robot base with ground.

The figure below demonstrates the ability of motion control policies trained at different frequencies to be robust to variations in dynamics and external perturbations.

LFMC policies offer better robustness. However, for motion control frequencies less than 8 Hz, we observe poor reactivity which affects their success rate. Overall, we observed the 25 Hz motion control frequency offered the best balance of reactivity and robustness. We do expect additional environment and reward tuning to improve the performance of 10 Hz and lower frequency policies.

-

A Aldo Faisal, Luc PJ Selen, and Daniel M Wolpert. Noise in the nervous system. Nature reviews neuroscience, 9(4):292–303, 2008. ↩

-

Heather L More, John R Hutchinson, David F Collins, Douglas J Weber, Steven KH Aung, and J Maxwell Donelan. Scaling of sensorimotor control in terrestrial mammals. Proceedings of the Royal Society B: Biological Sciences, 277(1700):3563–3568, 2010. ↩

-

Heather L More and J Maxwell Donelan. Scaling of sensorimotor delays in terrestrial mammals. Proceedings of the Royal Society B, 285(1885):20180613, 2018. ↩

-

Milad Shafiee Ashtiani, Alborz Aghamaleki Sarvestani, and Alexander Badri-Spröwitz. Hybrid parallel compliance allows robots to operate with sensorimotor delays and low control frequencies. Frontiers in Robotics and AI, pages 170, 2021. ↩

-

John EA Bertram, Anne Gutmann, Jabina Randev, and Manuel Hulliger. Domestic cat walking parallels human constrained optimization: optimization strategies and the comparison of normal and sensory deficient individuals. Human Movement Science, 36:154–166, 2014. ↩

-

Azhar Aulia Saputra, Naoyuki Takesue, Kazuyoshi Wada, Auke Jan Ijspeert, and Naoyuki Kubota. Aquro: a cat-like adaptive quadruped robot with novel bio-inspired capabilities. Frontiers in Robotics and AI, 8:562524, 2021. ↩

-

John Schulman, Filip Wolski, Prafulla Dhariwal, Alec Radford, and Oleg Klimov. Proximal policy optimization algorithms. 2017. arXiv:1707.06347. ↩

-

Jemin Hwangbo, Joonho Lee, Alexey Dosovitskiy, Dario Bellicoso, Vassilios Tsounis, Vladlen Koltun, and Marco Hutter. Learning agile and dynamic motor skills for legged robots. Science Robotics, 4(26):eaau5872, 2019. ↩