Usage

The following sections demonstrate example uses of the dataset for several robot navigation research topics:

- LIDAR SLAM

- Visual Appearance-based Loop Closure

- 3D LIDAR Reconstruction

- Visual Odometry (including VINS or Visual-Inertial Odometry)

For a quick summary, watch this two-minute video:

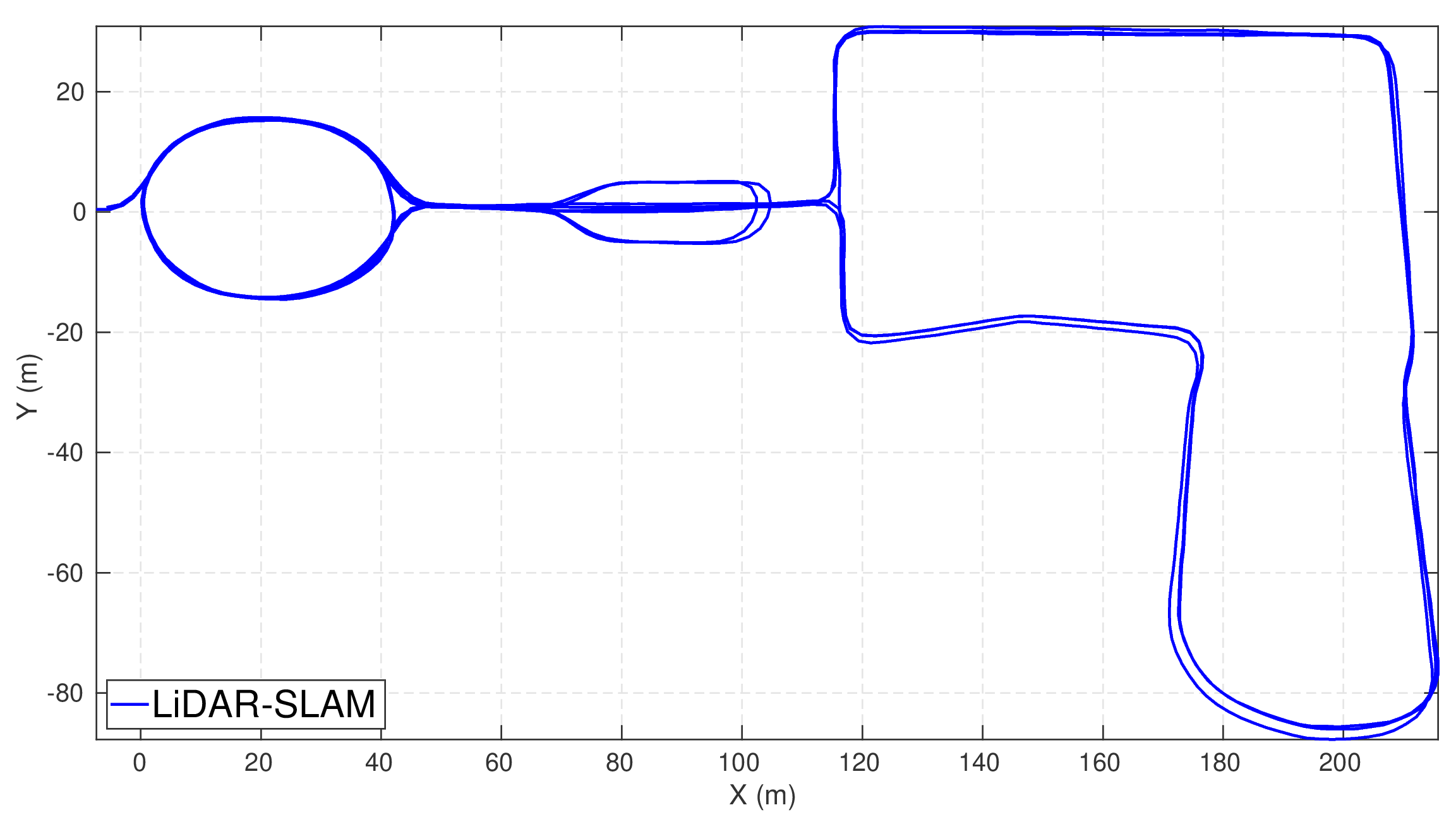

LIDAR SLAM

We used our LIDAR SLAM system [Ramezani 2020] to demonstrate real-time 3D mapping. We estimate LIDAR ego-motion at 2 Hz by lightly coupling visual odometry [Huang 2011] with LIDAR odometry [Pomerleau 2013], and we detect loop closures geometrically. The resulting pose graph is optimized using GTSAM. The first image below shows the trajectory for the entire dataset, and the second shows an example point cloud reconstruction.
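The idea behind pose graph optimization can be shown with a toy example. The sketch below is neither GTSAM nor our SLAM system: it assumes 1D positions and linear constraints, so a single weighted least-squares solve suffices, whereas GTSAM optimizes full 6-DoF poses iteratively. The function names are hypothetical.

```python
def solve_linear(A, b):
    """Solve A x = b by Gaussian elimination with partial pivoting."""
    n = len(A)
    M = [list(row) + [b[i]] for i, row in enumerate(A)]  # augmented matrix copy
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(M[r][col]))
        M[col], M[pivot] = M[pivot], M[col]
        for r in range(col + 1, n):
            f = M[r][col] / M[col][col]
            for c in range(col, n + 1):
                M[r][c] -= f * M[col][c]
    x = [0.0] * n
    for i in range(n - 1, -1, -1):  # back substitution
        s = sum(M[i][j] * x[j] for j in range(i + 1, n))
        x[i] = (M[i][n] - s) / M[i][i]
    return x


def optimize_pose_graph_1d(num_poses, factors):
    """Hypothetical toy pose graph over 1D positions x_0..x_{n-1}.

    Each factor is (i, j, z, w): a relative constraint x_j - x_i ~ z with
    weight w (inverse variance), or a prior x_j ~ z when i is None.
    Minimises sum w * residual^2 via the normal equations H x = g.
    """
    H = [[0.0] * num_poses for _ in range(num_poses)]
    g = [0.0] * num_poses
    for i, j, z, w in factors:
        a = [0.0] * num_poses  # Jacobian row of this factor
        a[j] = 1.0
        if i is not None:
            a[i] = -1.0
        for r in range(num_poses):
            g[r] += w * a[r] * z
            for c in range(num_poses):
                H[r][c] += w * a[r] * a[c]
    return solve_linear(H, g)


# Drifting odometry out and back, plus a loop closure saying x_4 == x_0.
odometry = [1.1, 1.0, -0.9, -1.0]
factors = [(None, 0, 0.0, 100.0)]  # strong prior anchors x_0 at 0
factors += [(k, k + 1, z, 1.0) for k, z in enumerate(odometry)]
factors.append((0, 4, 0.0, 1.0))  # loop closure
poses = optimize_pose_graph_1d(5, factors)
# poses is approximately [0.0, 1.06, 2.02, 1.08, 0.04]
```

Dead reckoning alone would end 0.2 m from the start; the loop closure spreads that drift evenly over the five equally weighted edges of the loop, so each edge absorbs 0.04 m.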

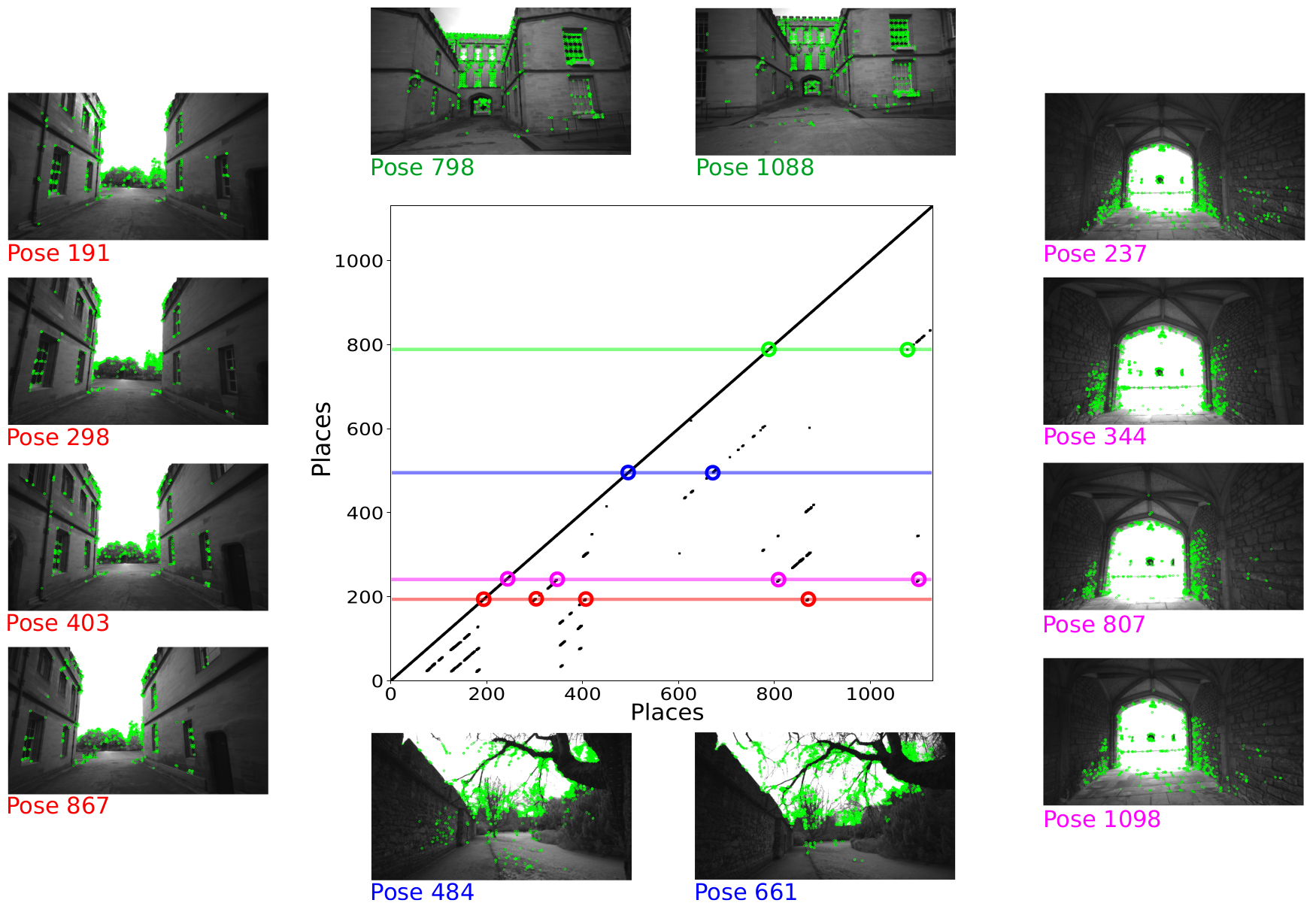

Visual Appearance-based Loop Closure

The following image shows how the dataset was used for visual place recognition. Using a sequence of ground-truth poses spaced 2 m apart, we computed a similarity matrix (centre image). Each entry scores how similar two places look; high scores should ideally correspond to the same physical location.
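A minimal sketch of the two steps above, assuming ground-truth positions as (x, y, z) tuples in metres and one global descriptor vector per image. The cosine similarity is a stand-in chosen for illustration, since the score that produced the matrix is not stated here; all names are hypothetical.

```python
import math


def subsample_by_distance(positions, spacing=2.0):
    """Indices of poses kept so that consecutive kept poses are separated
    by at least `spacing` metres of travelled path."""
    if not positions:
        return []
    keep = [0]
    travelled = 0.0
    for k in range(1, len(positions)):
        travelled += math.dist(positions[k - 1], positions[k])
        if travelled >= spacing:
            keep.append(k)
            travelled = 0.0
    return keep


def cosine(u, v):
    """Cosine similarity; a stand-in score chosen for illustration."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0


def similarity_matrix(descriptors, score=cosine):
    """Entry (i, j) scores how alike places i and j look."""
    n = len(descriptors)
    return [[score(descriptors[i], descriptors[j]) for j in range(n)]
            for i in range(n)]


# Hypothetical ground truth sampled every 0.5 m along a straight line.
positions = [(0.5 * k, 0.0, 0.0) for k in range(10)]
kept = subsample_by_distance(positions)  # [0, 4, 8]
```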

We used DBoW2 [Galvez-Lopez 2012] with ORB features [Rublee 2011] to retrieve the 10 previous images most similar to the current image. Some examples of the most similar views, captured at different times, are shown below.
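DBoW2 represents each image as a sparse bag-of-words vector over a vocabulary of quantized ORB descriptors. The sketch below assumes those vectors are already available as Python dicts mapping word id to weight, and ranks previous images with the L1 score used by DBoW2, s(v1, v2) = 1 - 0.5 ||v1/|v1| - v2/|v2|||_1. It illustrates the scoring only and is not the DBoW2 API; the function names are hypothetical.

```python
def l1_score(v1, v2):
    """L1 similarity between sparse bag-of-words vectors (word id -> weight):
    1 - 0.5 * || v1/|v1| - v2/|v2| ||_1. Lies in [0, 1] for non-negative
    weights; 1 means identical normalized word distributions."""
    n1 = sum(abs(w) for w in v1.values())
    n2 = sum(abs(w) for w in v2.values())
    if n1 == 0 or n2 == 0:
        return 0.0
    words = set(v1) | set(v2)
    diff = sum(abs(v1.get(w, 0.0) / n1 - v2.get(w, 0.0) / n2) for w in words)
    return 1.0 - 0.5 * diff


def most_similar_previous(previous, current, k=10):
    """Indices of the k previous images whose BoW vectors best match `current`."""
    ranked = sorted(range(len(previous)),
                    key=lambda idx: l1_score(current, previous[idx]),
                    reverse=True)
    return ranked[:k]


# Hypothetical BoW vectors for three earlier images and the current one.
previous = [{1: 1.0, 2: 1.0}, {3: 1.0}, {1: 1.0, 2: 0.5}]
current = {1: 1.0, 2: 1.0}
matches = most_similar_previous(previous, current, k=2)  # [0, 2]
```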

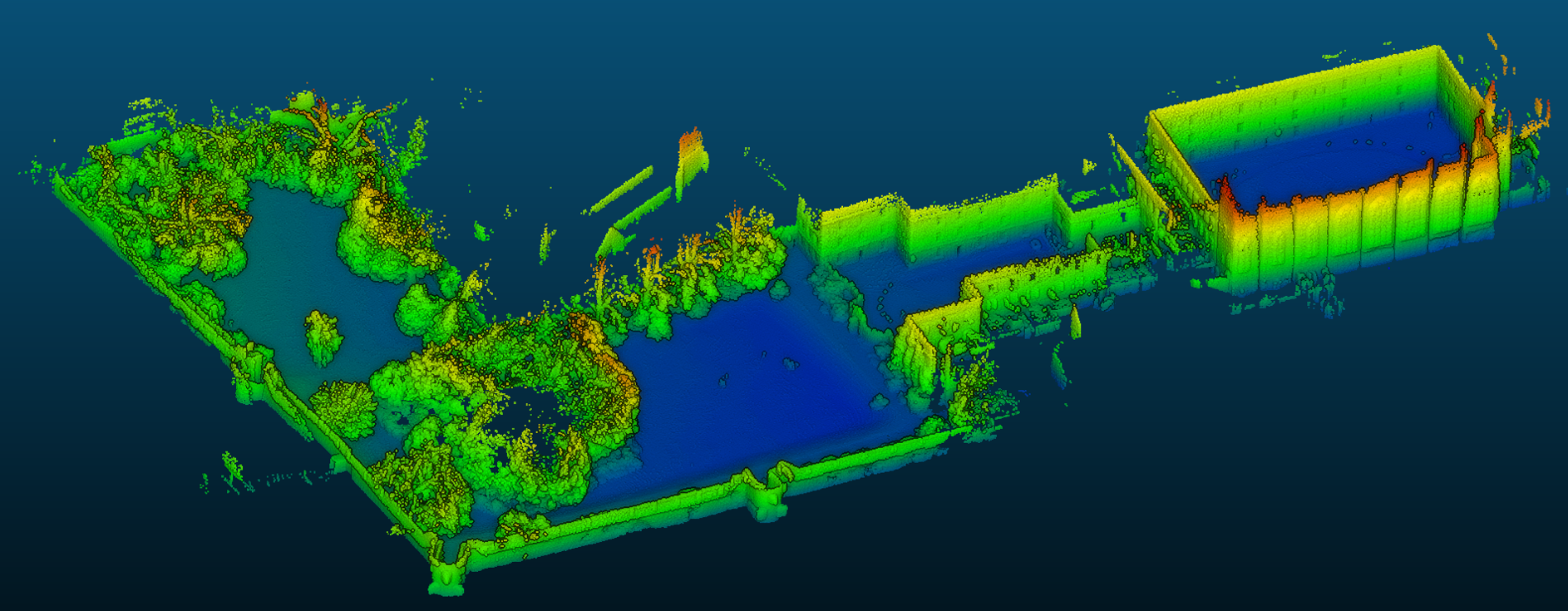

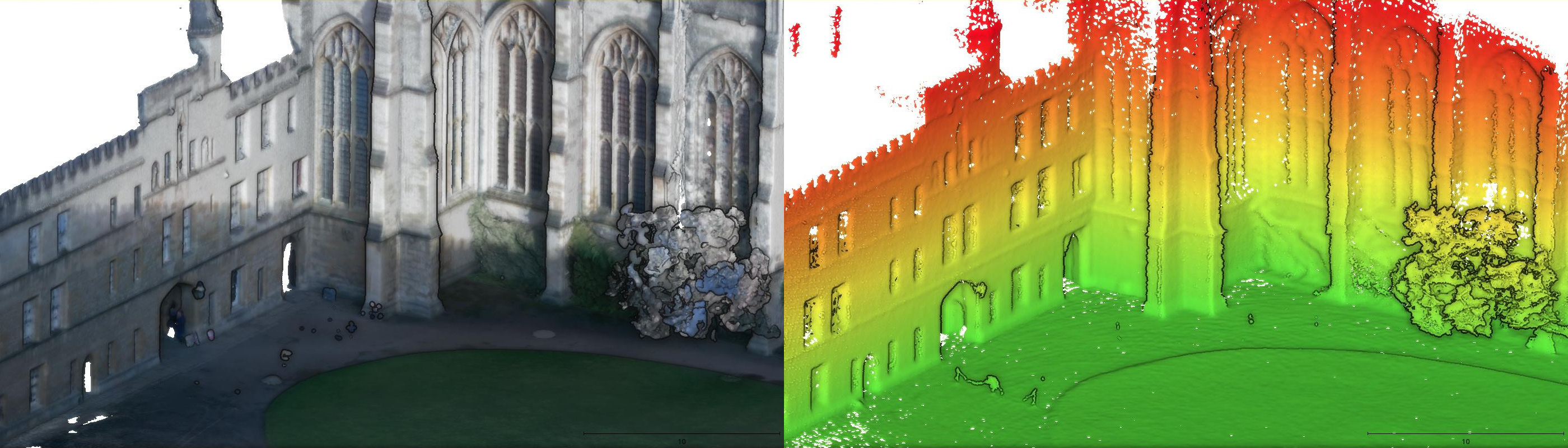

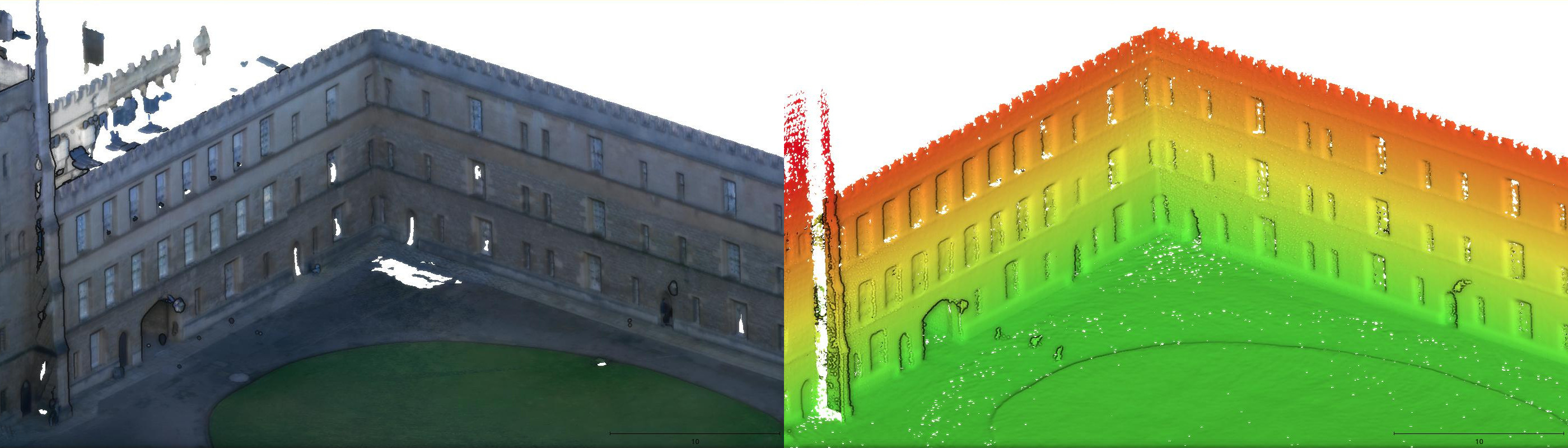

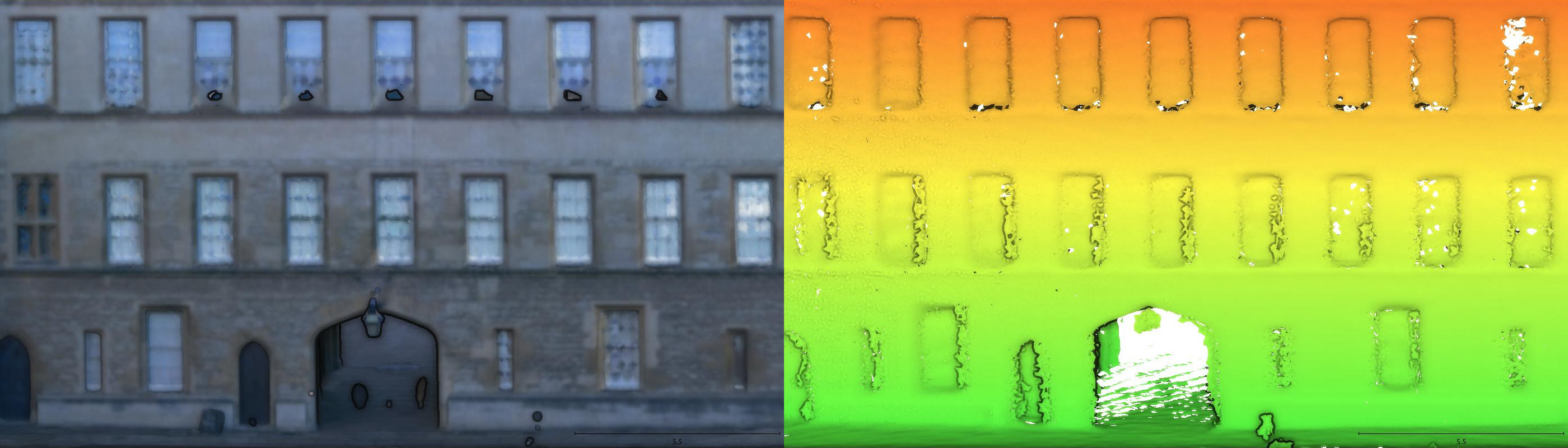

3D LIDAR Reconstruction

The following views show the point cloud from the Leica BLK scanner (left) and reconstructions made using the Ouster LIDAR scans.

The Ouster-based reconstructions use the ground-truth poses of the sensor to project the scans into 3D. The reconstruction filter chain consists of moving least squares smoothing [Alexa 2003], voxel grid filtering and greedy projection triangulation [Marton 2009], all of which are implemented in the Point Cloud Library (PCL).
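Two steps of this pipeline are simple enough to sketch directly: projecting a scan into the world frame with its ground-truth pose, and voxel grid downsampling, where every voxel's points are replaced by their centroid. This is a minimal pure-Python illustration assuming poses given as a 3x3 rotation plus a translation; it is not PCL code, and the smoothing and triangulation stages (pcl::MovingLeastSquares and pcl::GreedyProjectionTriangulation in PCL) are not reproduced. The function names are hypothetical.

```python
import math
from collections import defaultdict


def transform_points(points, rotation, translation):
    """Map sensor-frame points into the world frame: p_w = R p_s + t.
    `rotation` is a 3x3 nested list, `translation` a 3-tuple."""
    return [
        tuple(sum(rotation[r][c] * p[c] for c in range(3)) + translation[r]
              for r in range(3))
        for p in points
    ]


def voxel_grid_filter(points, leaf_size):
    """Downsample by replacing all points inside each cubic voxel of edge
    `leaf_size` with their centroid (similar in spirit to pcl::VoxelGrid)."""
    buckets = defaultdict(list)
    for p in points:
        key = tuple(math.floor(coord / leaf_size) for coord in p)
        buckets[key].append(p)
    return [tuple(sum(axis) / len(pts) for axis in zip(*pts))
            for pts in buckets.values()]


# Hypothetical ground-truth pose: 90 degree yaw and a 1 m offset along x.
R = [[0.0, -1.0, 0.0], [1.0, 0.0, 0.0], [0.0, 0.0, 1.0]]
t = (1.0, 0.0, 0.0)
scan = [(0.1, 0.1, 0.1), (0.3, 0.3, 0.3), (2.0, 0.0, 0.0)]
cloud = voxel_grid_filter(transform_points(scan, R, t), leaf_size=1.0)
```

The first two points land in the same 1 m voxel and merge into one centroid, so the three-point scan becomes a two-point cloud.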

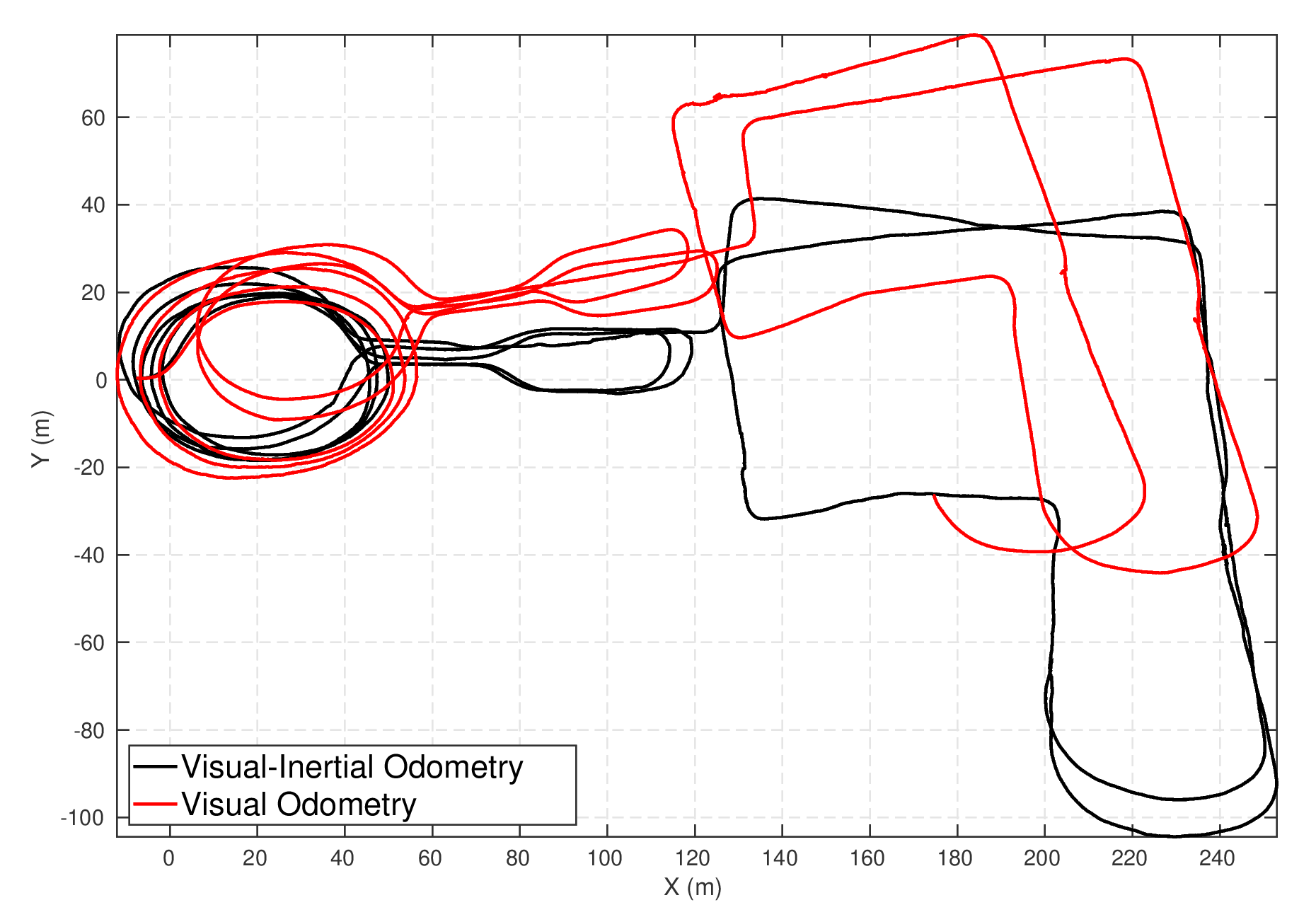

Visual Odometry

We tested ORB-SLAM2 [Mur-Artal 2019] as a standard stereo odometry algorithm (with loop closures disabled), as well as our own visual-inertial odometry approach, VILENS [Wisth 2019], which performs windowed smoothing over both the stereo images and the IMU measurements.

The figure below shows the trajectories estimated by the two approaches using the stereo image pairs from the first 1500 seconds of the dataset.

We provide visual and inertial measurements that are time-synchronized in software, together with calibration files for use with Kalibr [Furgale 2013].
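Because the camera and IMU timestamps share a common clock, a visual-inertial front end can associate the two streams directly by timestamp. A minimal sketch, assuming both streams are sorted integer timestamps (for example in nanoseconds): it collects the indices of the IMU samples falling between each pair of consecutive camera frames, which is the set a VIO system would typically integrate between two image updates. The function name is hypothetical.

```python
from bisect import bisect_right


def imu_between_frames(frame_stamps, imu_stamps):
    """For each consecutive pair of sorted camera timestamps (t_prev, t_curr),
    return the indices of IMU samples with t_prev < t <= t_curr."""
    windows = []
    for t_prev, t_curr in zip(frame_stamps, frame_stamps[1:]):
        lo = bisect_right(imu_stamps, t_prev)  # first sample after t_prev
        hi = bisect_right(imu_stamps, t_curr)  # one past last sample <= t_curr
        windows.append(list(range(lo, hi)))
    return windows


# Hypothetical timestamps in ms: camera every 100 ms, IMU every 25 ms.
frames = [0, 100, 200]
imu = [0, 25, 50, 75, 100, 125, 150, 175, 200]
windows = imu_between_frames(frames, imu)  # [[1, 2, 3, 4], [5, 6, 7, 8]]
```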

Multi-Camera Visual Odometry

With the addition of the multi-camera dataset, we developed a multi-camera visual-inertial odometry system, VILENS-MC [Zhang 2021]. The system is based on factor graph optimization and estimates motion using all cameras simultaneously. We focus on motion tracking in challenging environments that cause traditional monocular or stereo odometry to fail. To achieve real-time performance, we track features as they move from one camera to another and maintain a fixed overall budget of tracked features across the cameras.
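One way to keep a fixed overall feature budget across several cameras is sketched below. This is a hypothetical allocation scheme for illustration, not the VILENS-MC implementation: it assumes each camera supplies a ranked list of already-tracked features (including any handed over from another camera) and a ranked list of new detections. Tracked features are kept first, and the remaining budget is filled with new detections, interleaving cameras so that no single camera dominates.

```python
from itertools import zip_longest


def round_robin(per_camera):
    """Yield (camera, feature) pairs, taking one feature from each camera in
    turn. Assumes feature ids are never None (None is the padding value)."""
    cams = sorted(per_camera)
    for row in zip_longest(*(per_camera[c] for c in cams)):
        for cam, feat in zip(cams, row):
            if feat is not None:
                yield cam, feat


def allocate_budget(tracked, detections, budget):
    """Hypothetical scheme: keep tracked features first, then fill the rest of
    a fixed total budget with new detections, interleaving cameras."""
    selected = {cam: [] for cam in set(tracked) | set(detections)}
    count = 0
    for pool in (tracked, detections):
        for cam, feat in round_robin(pool):
            if count == budget:
                return selected
            selected[cam].append(feat)
            count += 1
    return selected


tracked = {"cam0": ["a", "b", "c"], "cam1": ["d"]}
detections = {"cam1": ["e", "f"], "cam2": ["g"]}
selection = allocate_budget(tracked, detections, budget=5)
# cam0 keeps a, b, c; cam1 keeps d and the new detection e; cam2 gets none.
```

Prioritizing tracked features keeps long feature tracks alive, which is what makes cross-camera tracking useful, while the hard cap keeps the per-frame optimization cost bounded.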

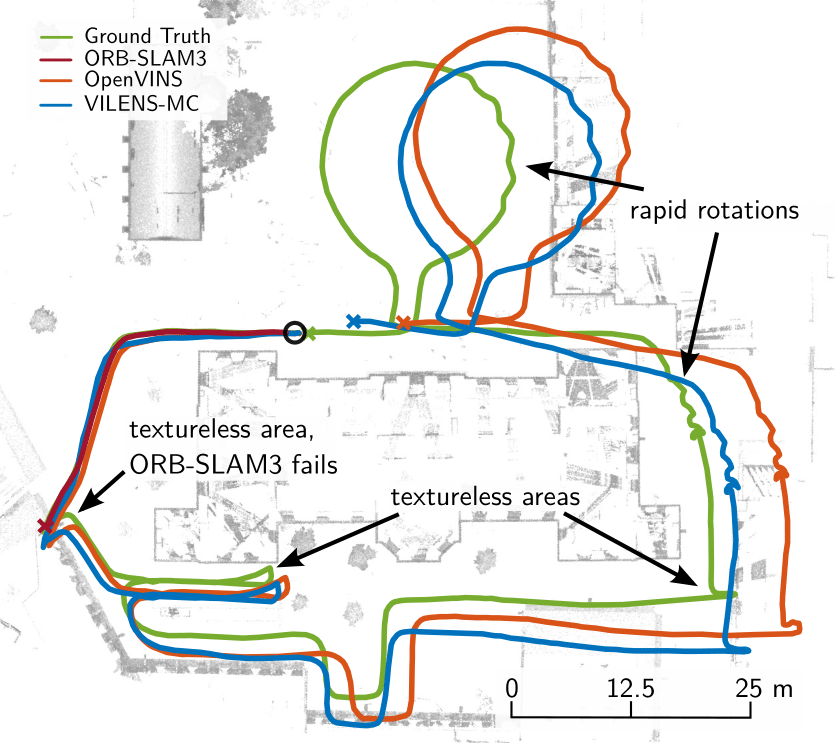

The figure below shows the trajectories estimated by OpenVINS [Geneva 2019], ORB-SLAM3 [Campos 2021] and VILENS-MC on the Math-Hard dataset.