LIDAR Place Recognition

Localization using LIDAR has advantages over visual localization. In particular, LIDAR offers a high degree of viewpoint and lighting invariance. However, it is less informative. We have developed machine learning algorithms that detect places more reliably by using segments as the basic unit within a point cloud.

Efficient Segmentation and Mapping (ESM, RA-L 2019)

Abstract: Localization in challenging, natural environments such as forests or woodlands is an important capability for many applications, from guiding a robot along a forest trail to monitoring vegetation growth with handheld sensors. In this work we explore laser-based localization in both urban and natural environments, in a manner suitable for online applications. We propose a deep learning approach capable of learning meaningful descriptors directly from 3D point clouds by comparing triplets (anchor, positive and negative examples). The approach learns a feature space representation for a set of segmented point clouds that are matched between current and previous observations. Our learning method is tailored towards loop closure detection, resulting in a small model that can be deployed using only a CPU. The proposed learning method would allow the full pipeline to run on robots with limited computational payload, such as drones, quadrupeds or UGVs.
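The triplet comparison described above can be illustrated with the standard triplet margin loss, which pushes a segment's descriptor closer to a positive example (the same place) than to a negative example (a different place) by at least a margin. The following is a minimal, framework-free sketch assuming plain Euclidean distances and a hypothetical margin of 0.5; the abstract does not state the exact distance, margin or loss weighting used in the paper.

```python
import math


def euclidean(a, b):
    # Euclidean distance between two descriptor vectors.
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def triplet_loss(anchor, positive, negative, margin=0.5):
    # Hinge on the gap between the anchor-positive and anchor-negative distances.
    # The default margin is a hypothetical choice, not taken from the paper.
    return max(0.0, euclidean(anchor, positive) - euclidean(anchor, negative) + margin)


def batch_triplet_loss(triplets, margin=0.5):
    # Mean triplet loss over a list of (anchor, positive, negative) descriptors.
    return sum(triplet_loss(a, p, n, margin) for a, p, n in triplets) / len(triplets)


# A well-separated triplet contributes nothing; an ambiguous one is penalized.
print(triplet_loss([0.0, 0.0], [0.1, 0.0], [3.0, 0.0]))
print(triplet_loss([0.0, 0.0], [1.0, 0.0], [1.0, 0.0]))
```

A loss of zero means the triplet is already separated by the margin and contributes no gradient; in a real training pipeline the same expression would be evaluated on descriptor tensors inside a deep learning framework.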

PCA visualization of the feature space after training. Each sample represents a point cloud segment in 3D space, coloured by class.

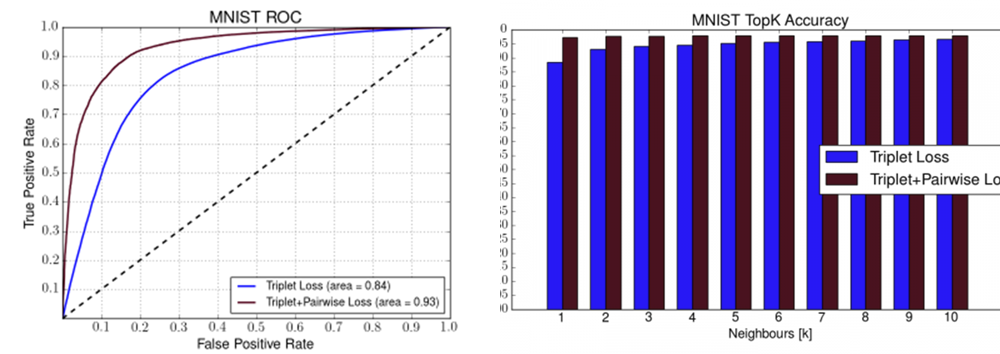
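A figure like this can be produced by projecting the learned descriptors onto their first three principal components. The following is a minimal NumPy sketch of that projection via the singular value decomposition; the function name `pca_project` is hypothetical, and the page does not specify how the original figure was generated.

```python
import numpy as np


def pca_project(features, n_components=3):
    """Project an (N, D) array of descriptors onto its top principal components."""
    X = np.asarray(features, dtype=float)
    # PCA operates on mean-centred data.
    X_centered = X - X.mean(axis=0)
    # Rows of vt are the principal directions, ordered by decreasing singular value.
    _, _, vt = np.linalg.svd(X_centered, full_matrices=False)
    return X_centered @ vt[:n_components].T


# Example: 50 random 8-D descriptors reduced to 3-D coordinates for a scatter plot.
rng = np.random.default_rng(0)
coords = pca_project(rng.normal(size=(50, 8)))
print(coords.shape)
```

Each row of the result gives the 3D position of one segment, which can then be plotted and coloured by its class label.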

Supplementary Material – Loss Evaluation

We trained two versions of our network on the MNIST dataset in order to understand the impact of each loss: 1) with the triplet loss only and 2) with both the triplet and pairwise losses. Because our network takes raw point clouds rather than images as input, we transformed the images of the digits into point clouds with Z=0 (a planar surface) by uniformly sampling 256 points from the white parts of each image (white represents the digit; the background is always black). The figures below present the ROC curve and the Top-K accuracy evaluated on the unseen portion of the MNIST dataset.
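The MNIST-to-point-cloud conversion described above can be sketched as follows. This is an illustration under stated assumptions rather than the authors' code: the image is taken to be a list of rows of 0-255 grayscale values, pixels at or above a hypothetical threshold of 128 count as "white", and uniform sampling is implemented by picking a white pixel at random and then a random position inside it, which spreads the points uniformly over the digit's area. Sampling with replacement matters because a 28x28 MNIST digit often has fewer than 256 foreground pixels.

```python
import random


def digit_to_point_cloud(image, n_points=256, threshold=128, seed=None):
    """Convert a grayscale digit image (rows of 0-255 values) into a planar point cloud."""
    rng = random.Random(seed)
    # Foreground ("white") pixels hold the digit; the background is black.
    # The threshold is a hypothetical choice for binarizing grayscale pixels.
    white = [(r, c) for r, row in enumerate(image)
             for c, v in enumerate(row) if v >= threshold]
    if not white:
        raise ValueError("image has no foreground pixels")
    points = []
    for _ in range(n_points):
        # Choose a white pixel uniformly (with replacement), then a uniform
        # position inside that unit pixel square.
        r, c = rng.choice(white)
        x = c + rng.random()
        y = r + rng.random()  # row index grows downwards, as in the image
        points.append((x, y, 0.0))  # Z = 0: the digit lies on a plane
    return points
```

The result is a list of `(x, y, 0.0)` tuples that can be fed to a point-cloud network in place of a 3D segment.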

Publication

“Learning to See the Wood for the Trees: Deep Laser Localization in Urban and Natural Environments on a CPU”, Georgi Tinchev, Adrian Penate-Sanchez, Maurice Fallon, IEEE Robotics and Automation Letters, 2019.

Natural Segmentation and Matching (NSM, RA-L 2019)

Abstract: In this work we introduce Natural Segmentation and Matching (NSM), an algorithm for reliable laser-based localization in both urban and natural environments. Current state-of-the-art global approaches do not generalize well to structure-poor vegetated areas such as forests or orchards, where clutter and perceptual aliasing prevent reliable extraction of repeatable and distinctive landmarks to be matched between different test runs. In natural forests, tree trunks are not distinct, foliage intertwines and there is a complete lack of planar structure.

In this paper we propose a place recognition method with a more involved feature extraction process that is better suited to this environment. First, a feature extraction module extracts stable, reliable, object-sized segments from a point cloud despite the presence of heavy clutter or tree foliage. Second, repeatable oriented keyframes are extracted and matched with a reliable shape descriptor using a Random Forest to estimate the current sensor’s position within the target map. We present qualitative and quantitative evaluation on three datasets from different environments: the KITTI benchmark, a parkland scene and a foliage-heavy forest.
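The matching stage relies on a Random Forest to decide whether two observations correspond. The page does not describe the classifier's inputs, so the following pure-Python sketch assumes a hypothetical pair representation (the absolute per-dimension difference between two shape descriptors) and implements a small Random Forest from scratch: bootstrap-sampled decision trees with Gini splits over a random subset of features at each node, where the forest's vote fraction serves as a match probability. All function names are illustrative, and a real system would more likely use an optimized library implementation.

```python
import math
import random
from collections import Counter


def pair_features(desc_a, desc_b):
    # Hypothetical pair representation: absolute per-dimension descriptor difference.
    return [abs(a - b) for a, b in zip(desc_a, desc_b)]


def gini(labels):
    # Gini impurity of a non-empty list of class labels.
    n = len(labels)
    return 1.0 - sum((c / n) ** 2 for c in Counter(labels).values())


def build_tree(X, y, depth, max_depth, n_feats, rng):
    majority = Counter(y).most_common(1)[0][0]
    # Stop at the depth limit or when the node is pure; a leaf stores a label.
    if depth >= max_depth or len(set(y)) == 1:
        return majority
    best = None
    # Consider only a random subset of features at this node.
    for f in rng.sample(range(len(X[0])), n_feats):
        for t in set(row[f] for row in X):
            left = [i for i, row in enumerate(X) if row[f] <= t]
            right = [i for i, row in enumerate(X) if row[f] > t]
            if not left or not right:
                continue
            # Size-weighted Gini impurity of the two children.
            score = (len(left) * gini([y[i] for i in left])
                     + len(right) * gini([y[i] for i in right])) / len(y)
            if best is None or score < best[0]:
                best = (score, f, t, left, right)
    if best is None:
        return majority
    _, f, t, left, right = best
    left_child = build_tree([X[i] for i in left], [y[i] for i in left],
                            depth + 1, max_depth, n_feats, rng)
    right_child = build_tree([X[i] for i in right], [y[i] for i in right],
                             depth + 1, max_depth, n_feats, rng)
    return (f, t, left_child, right_child)


def predict_tree(node, x):
    # Internal nodes are (feature, threshold, left, right) tuples; leaves are labels.
    while isinstance(node, tuple):
        f, t, left, right = node
        node = left if x[f] <= t else right
    return node


def train_forest(X, y, n_trees=15, max_depth=4, seed=0):
    # y holds 1 for matching pairs and 0 for non-matching pairs.
    rng = random.Random(seed)
    n_feats = max(1, int(math.sqrt(len(X[0]))))
    forest = []
    for _ in range(n_trees):
        # Bootstrap: sample training pairs with replacement for each tree.
        idx = [rng.randrange(len(X)) for _ in range(len(X))]
        forest.append(build_tree([X[i] for i in idx], [y[i] for i in idx],
                                 0, max_depth, n_feats, rng))
    return forest


def match_probability(forest, x):
    # Fraction of trees voting "match" for the pair features x.
    return sum(predict_tree(tree, x) for tree in forest) / len(forest)
```

Pairs whose vote fraction exceeds a chosen threshold would be accepted as candidate matches; the threshold, tree count and depth here are arbitrary.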

The experiments show that our approach achieves place recognition in woodlands while also outperforming current state-of-the-art approaches in urban scenarios without specific tuning.

Publication