SiLVR: Scalable Lidar-Visual Radiance Field Reconstruction with Uncertainty Quantification

Accepted at the IEEE Transactions on Robotics (T-RO)

SiLVR is a scalable lidar-visual radiance field reconstruction framework with epistemic uncertainty quantification.

Abstract

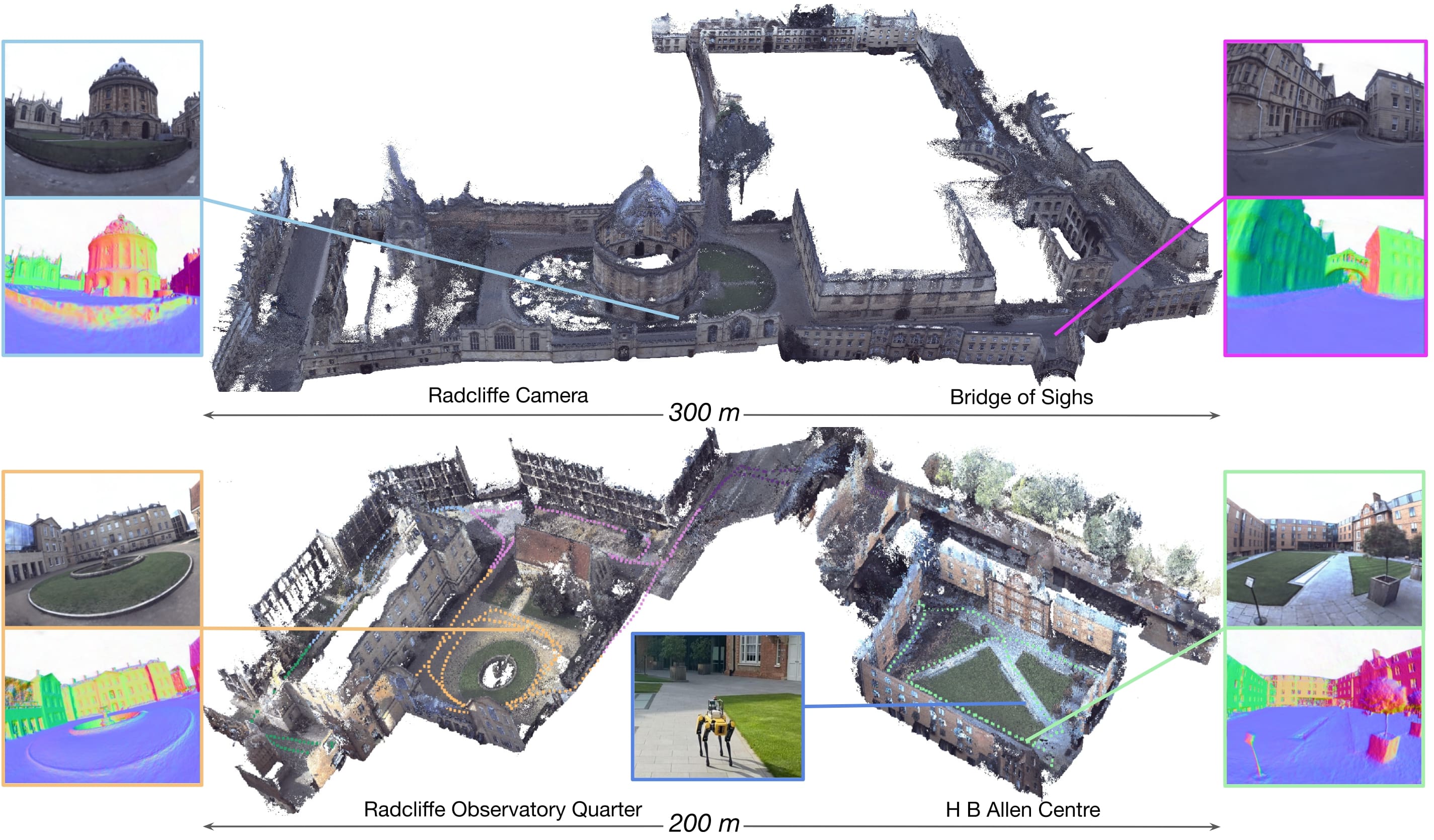

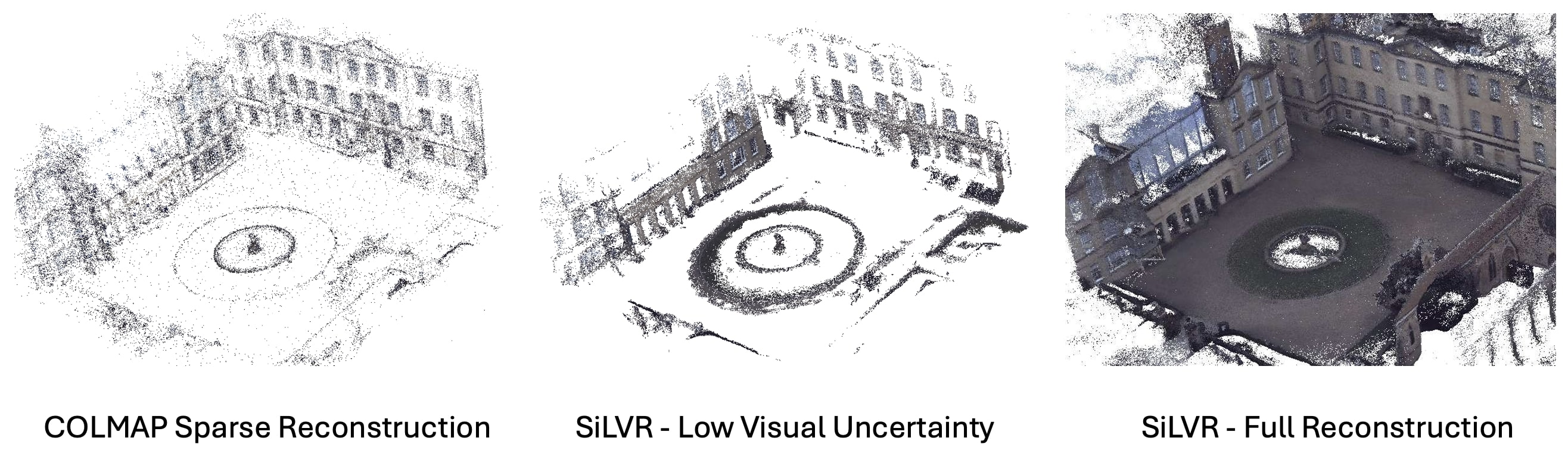

We present a neural radiance field (NeRF) based large-scale reconstruction system that fuses lidar and vision data to generate high-quality reconstructions that are geometrically accurate and capture photorealistic texture. Our system adopts the state-of-the-art NeRF representation and extends it to incorporate lidar. Lidar data adds strong geometric constraints on depth and surface normals, which is particularly useful when modelling uniformly textured surfaces that provide ambiguous visual reconstruction cues. A key contribution of this work is a novel method to quantify the epistemic uncertainty of the lidar-visual NeRF reconstruction by estimating the spatial variance of each point location in the radiance field given the sensor observations from the cameras and lidar. This provides a principled approach to evaluate the contribution of each sensor modality to the final reconstruction. In this way, reconstructions that are uncertain (due to, e.g., uniform visual texture, limited observation viewpoints, or little lidar coverage) can be identified and removed. Our system is integrated with a real-time pose-graph lidar SLAM system, which is used to bootstrap a Structure-from-Motion (SfM) reconstruction procedure. The SLAM system also helps to properly constrain the overall metric scale, which is essential for the lidar depth loss. The refined SLAM trajectory can then be divided into submaps using Spectral Clustering to group sets of co-visible images together. This submapping approach is more suitable for visual reconstruction than distance-based partitioning. Our uncertainty estimation is particularly effective when merging submaps, as their boundaries often contain artefacts due to limited observations. We demonstrate the reconstruction system using a multi-camera and lidar sensor suite in experiments involving both robot-mounted and handheld scanning.
Our test datasets cover a total area of more than 20,000 m², including multiple university buildings and an aerial survey of a multi-storey building. Quantitative evaluation is provided by comparing with maps produced by a commercial tripod scanner.
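
To illustrate the idea of grouping co-visible images into submaps, the following is a minimal sketch of a two-way spectral partition. It is not the SiLVR implementation: the co-visibility matrix `covis` (counts of features shared between image pairs), the function name, and the restriction to two submaps are all assumptions made for illustration. Full spectral clustering typically uses several eigenvectors followed by k-means; this sketch only splits on the sign of the second eigenvector of the normalised affinity matrix, found by power iteration.

```python
import math
import random


def spectral_bipartition(covis, iters=200, seed=0):
    """Split images into two groups from a symmetric co-visibility matrix.

    covis[i][j] is a hypothetical count of features shared by images i and j.
    Returns one 0/1 label per image.
    """
    n = len(covis)
    # Degree of each image: its total co-visibility with all other images.
    deg = [sum(row) for row in covis]
    if n < 2 or any(d <= 0 for d in deg):
        raise ValueError("need at least two images, each co-visible with another")
    inv_sqrt = [1.0 / math.sqrt(d) for d in deg]

    # Normalised affinity M = D^{-1/2} W D^{-1/2}; its eigenvalues lie in [-1, 1].
    m = [[covis[i][j] * inv_sqrt[i] * inv_sqrt[j] for j in range(n)]
         for i in range(n)]

    # Trivial top eigenvector of M (eigenvalue 1), proportional to sqrt(deg).
    norm0 = math.sqrt(sum(deg))
    v0 = [math.sqrt(d) / norm0 for d in deg]

    rng = random.Random(seed)
    x = [rng.uniform(-1.0, 1.0) for _ in range(n)]
    for _ in range(iters):
        # Power iteration on (I + M) / 2, whose eigenvalues lie in [0, 1].
        x = [0.5 * (x[i] + sum(m[i][j] * x[j] for j in range(n)))
             for i in range(n)]
        # Remove the trivial component so the iteration converges
        # to the second eigenvector instead of the first.
        proj = sum(a * b for a, b in zip(x, v0))
        x = [a - proj * b for a, b in zip(x, v0)]
        scale = math.sqrt(sum(a * a for a in x))
        if scale < 1e-12:
            break  # nothing left to split on
        x = [a / scale for a in x]

    # The sign of the second eigenvector gives the two-way split.
    return [1 if a > 0 else 0 for a in x]


# Toy example: images 0-2 see the same scene, images 3-5 see another,
# and only images 2 and 3 share a single feature.
covis = [
    [0, 10, 10, 0, 0, 0],
    [10, 0, 10, 0, 0, 0],
    [10, 10, 0, 1, 0, 0],
    [0, 0, 1, 0, 10, 10],
    [0, 0, 0, 10, 0, 10],
    [0, 0, 0, 10, 10, 0],
]
labels = spectral_bipartition(covis)
print(labels)  # the two tightly co-visible groups receive different labels
```

Unlike distance-based partitioning, a split of this kind depends only on which images observe common scene content, so two images taken close together but facing different directions can end up in different submaps.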

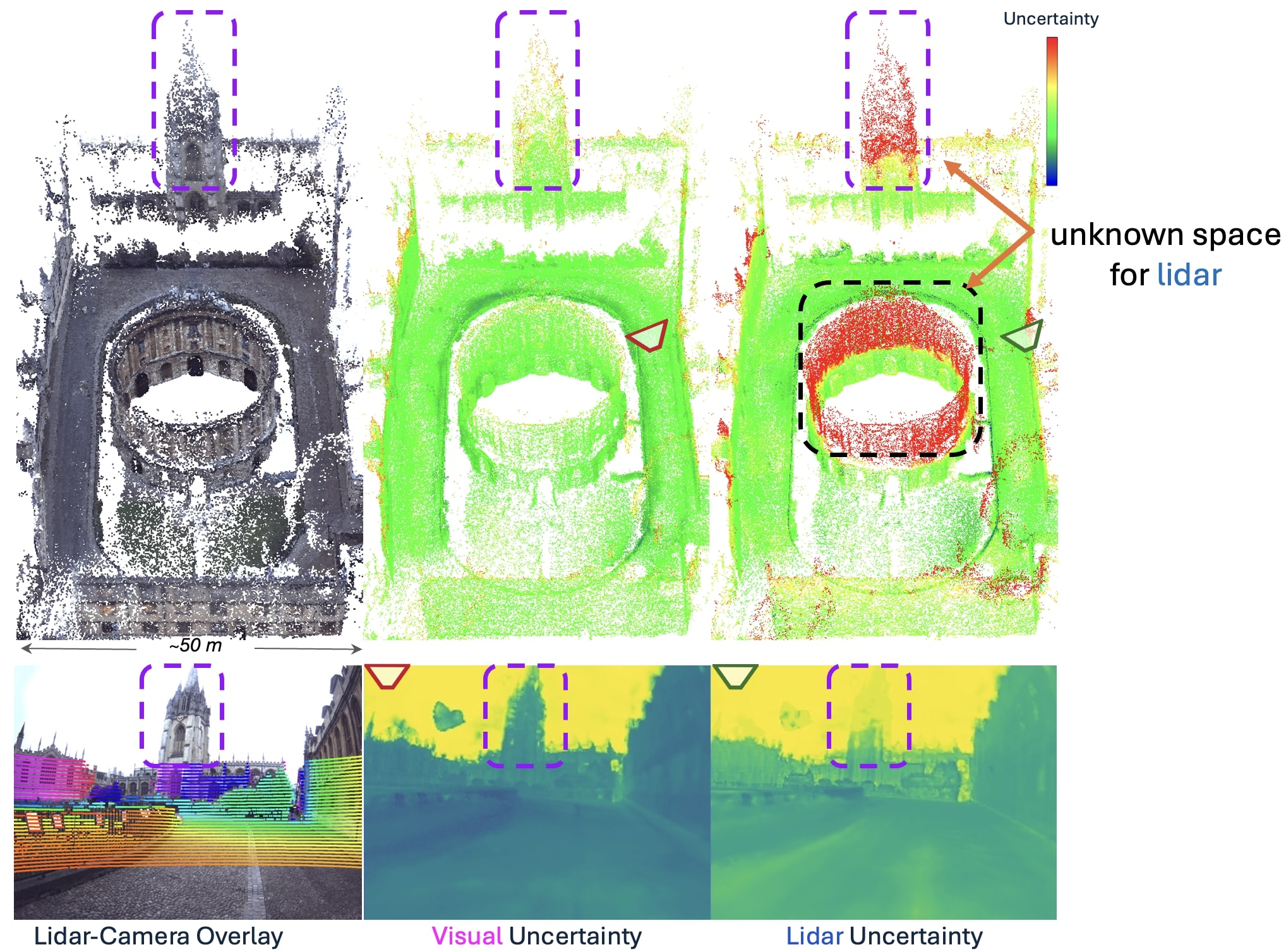

Epistemic Uncertainty Estimation

SiLVR's uncertainty estimates resemble the notions of uncertainty used in classical visual reconstruction frameworks (e.g. Structure-from-Motion). Regions with visual features (e.g. the edges of the fountain below) are identified as having low visual uncertainty, and regions without visual features (e.g. the textureless ground below) as having high visual uncertainty.

SiLVR's uncertainty estimates also resemble the notions of uncertainty used in classical range-measurement-based mapping frameworks (e.g. Occupancy Mapping). Space not observed by lidar (e.g. the tops of the buildings below), i.e. the unknown space, is identified as having high lidar (depth) uncertainty.
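
The abstract notes that uncertain parts of the reconstruction can be identified and removed. The sketch below shows one way such a filter could look once per-point uncertainties are available. Everything in it is an assumption for illustration: the `MapPoint` type, the two scalar uncertainty fields, the thresholds, and in particular the rule that a point is dropped only when neither sensor constrains it. It does not reproduce how SiLVR computes or combines its uncertainties.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class MapPoint:
    xyz: Tuple[float, float, float]
    visual_unc: float  # hypothetical per-point visual uncertainty
    lidar_unc: float   # hypothetical per-point lidar (depth) uncertainty


def filter_uncertain(points: List[MapPoint],
                     max_visual: float,
                     max_lidar: float) -> List[MapPoint]:
    """Keep points that at least one sensor modality constrains well.

    Assumed rule: a point is removed only if both its visual and its
    lidar uncertainty exceed their thresholds.
    """
    return [p for p in points
            if p.visual_unc <= max_visual or p.lidar_unc <= max_lidar]


points = [
    MapPoint((0.0, 0.0, 1.0), visual_unc=0.1, lidar_unc=0.1),   # textured, scanned
    MapPoint((1.0, 0.0, 0.0), visual_unc=0.9, lidar_unc=0.1),   # textureless ground
    MapPoint((0.0, 5.0, 9.0), visual_unc=0.2, lidar_unc=0.9),   # rooftop, no lidar
    MapPoint((8.0, 8.0, 8.0), visual_unc=0.9, lidar_unc=0.9),   # poorly observed
]
kept = filter_uncertain(points, max_visual=0.5, max_lidar=0.5)
print(len(kept))  # 3: only the poorly observed point is removed
```

Under this assumed rule, the textureless ground survives because lidar constrains it, and the rooftop survives because the cameras do; a stricter filter could require both modalities to be confident instead.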

Citation

@article{tao2025silvr,
  title={SiLVR: Scalable Lidar-Visual Radiance Field Reconstruction with Uncertainty Quantification},
  author={Tao, Yifu and Fallon, Maurice},
  journal={IEEE Transactions on Robotics},
  year={2025}
}